You can use Hive Parameters with HDInsight through PowerShell: http://andyelastacloud.azurewebsites.net/?p=1532

You can customise even transient HDInsight clusters with PowerShell: http://andyelastacloud.azurewebsites.net/?p=1482

Check out http://www.windowsazureconf.com! I (Andy) will be speaking at this great conference on a topic that’s very close to my heart – the integration of devices and the openness of Windows Azure. It’ll be a fun session that I’m giving from Redmond, so hopefully the jetlag will be bearable! 🙂

On November 14, 2012, Microsoft will be hosting Windows AzureConf, a free event for the Windows Azure community. This event will feature a keynote presentation by Scott Guthrie, along with numerous sessions executed by Windows Azure community members. Streamed live for an online audience on Channel 9, the event will allow you to see how developers just like you are using Windows Azure to develop applications on the best cloud platform in the industry. Community members from all over the world will join Scott in the Channel 9 studios to present their own inventions and experiences. Whether you’re just learning Windows Azure or you’ve already achieved success on the platform, you won’t want to miss this special event.

Find out more and sign up here http://windowsazureconf.net.

This article is pitched at a highly technical audience who are already working with Azure, StoryQ and potentially Selenium / Web Driver. It builds primarily on a previous article in this series, in which we explain all the frameworks listed above. If they are unfamiliar to you, we suggest reading through that article first:

Agile path ways into the Azure Universe – Access Control Service [ACS] [http://blog.elastacloud.com/2012/09/23/agile-path-ways-into-the-azure-universe-access-control-service-acs/]

For those who are completely new to test driven concepts, we also suggest reading through the following article as an overview of some of the concepts presented in this series.

Step By Step Guides - Getting started with White Box Behaviour Driven Development [http://blog.elastacloud.com/2012/08/21/step-by-step-guides-getting-started-with-specification-driven-development-sdd/]

In this article we will focus on building a base class which will allow the consumer to produce atomic, and repeatable Microsoft Azure based tests which can be run on the local machine.

The proposition is that, given a correctly installed machine with the right set of user permissions and configuration, we can check out fresh source from our source control repository and execute a set of tests to ensure the source is healthy. We can then add to these tests and drive out further functionality safely in our local environment prior to publication to Microsoft Azure.

The one caveat to this proposition is that, due to the nature of the Microsoft Azure cloud based services, there is only so much we can do in our local environment before we need to provide our tests with a connection to Azure assets (such as ACS [Access Control Service] or Service Bus). Various design patterns outside the scope of this article can substitute for some of these elements and provide some fidelity with the live environment. The decision on which route to take on these issues is project specific and will be covered in further articles in coming months.

Our own development setup is as follows:

· Windows 8 http://windows.microsoft.com/en-US/windows-8/release-preview

· Visual Studio 2012 http://www.microsoft.com/visualstudio/11/en-us

· Resharper 7 http://www.jetbrains.com/resharper/whatsnew/index.html

· NUnit http://nuget.org/packages/nunit

· NUnit Fluent Assertions http://fluentassertions.codeplex.com/

· Azure SDK [Currently 1.7] http://www.windowsazure.com/en-us/develop/net/

We find that the above combination of software packages makes for an exceptional development environment. Windows 8 is by far the most productive Operating System we have used across any hardware stack. JetBrains ReSharper has become an indispensable tool, without which Visual Studio feels highly limited. NUnit is our preferred testing framework; however, you could use MBUnit or XUnit. For those who must stick with a pure Microsoft ALM experience you could also use MSTest.

The Microsoft Azure toolset includes the Azure Emulator. This tool attempts to offer, in the local development environment, a semi-faithful experience of an application deployed on Windows Azure. This is achieved by emulating Storage and Compute on the local system. Unfortunately, due to the connected nature of the Azure platform, in particular the Service Bus element, the Emulator’s ability is somewhat limited. In the Test Driven world a number of these limitations can be worked around by running in a semi-connected mode (where your tests still have a dependency on Microsoft Azure and a requirement to be connected to the internet) for the essentials that cannot be emulated locally.

With forward thinking, good design and Mocking / Faking frameworks it is possible to simulate the behaviour of the Microsoft Azure connected elements. In this scenario every decision is a compromise and there is no right or wrong answer, just the right answer at that time for that project and that team.

Even with the above limitations the Emulator is a powerful development tool. It can work with either a local install of IIS or IIS Express. In the following example we will pair the emulator with IIS Express. We firmly believe in reducing the number of statically configured elements a developer needs in order to run a fresh checkout of a given code base from source control.

The first task is to set up some configuration entries to allow the framework to run the emulator in a process. These arguments define:

The first step is to add an app.config file to our test project

Note – a relative root can be configured for the CSX and the Service Configuration file. In this example, to keep things explicit, we have not done this.

[csx]

The second argument we need to configure is the path to the package. For our solution this looks like:

The third argument we need to set up is the path to the services configuration file. For our solution this looks like this:

The final argument we need is to tell the emulator to use IISExpress as its web server:

The final Configuration group:

Now we have the argument information captured in the application configuration file we need to build this information into an argument string. We have done this in our Test / Specification base:

In the TestFixtureSetup [called by child test classes]
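As a rough illustration only (this is not the authors' C# listing), the three configured values can be combined into emulator arguments along the following lines. The sketch is in Java; the class and method names are hypothetical, and the `/run:` and `/useiisexpress` switches are the csrun switches as we understand them from the SDK documentation, so check them against your SDK version.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: names and structure are illustrative assumptions.
final class EmulatorArguments {

    // Builds the csrun argument list from the values held in configuration.
    static List<String> build(String csxPath, String serviceConfigPath, boolean useIisExpress) {
        List<String> args = new ArrayList<>();
        // Assumed csrun syntax: /run:<csx directory>;<service configuration file>
        args.add("/run:" + csxPath + ";" + serviceConfigPath);
        if (useIisExpress) {
            // Assumed switch asking the emulator to host web roles in IIS Express.
            args.add("/useiisexpress");
        }
        return args;
    }

    // Joins the arguments into the single string a process start call would take.
    static String asCommandLine(List<String> args) {
        return String.join(" ", args);
    }
}
```

Keeping the arguments as a list until the last moment makes it easier to add or drop a switch per project without string surgery.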

Now we have all the information we need to pass to our process to execute the emulator; our next stage is to start a process and host the emulator using the arguments we have just defined.

The code is relatively trivial

Since Azure SDK 1.4, Role projects have not automatically created package contents for Azure. We need this to happen, so we use a small code fragment which we add to the Azure project file.
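For readers who want a feel for the shape of this step, here is a minimal Java sketch of hosting an external tool in a child process. It is an assumption-laden illustration rather than the authors' code: `EmulatorLauncher` is a hypothetical name, and the path to the emulator executable is whatever your SDK install provides.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical launcher: a sketch of starting the emulator as a child process.
final class EmulatorLauncher {

    // Prepares (but does not start) the process: executable first, then its arguments.
    static ProcessBuilder prepare(String executablePath, List<String> arguments) {
        List<String> command = new ArrayList<>();
        command.add(executablePath);
        command.addAll(arguments);
        // Forward the child's output to the test runner's console for easier diagnosis.
        return new ProcessBuilder(command).inheritIO();
    }

    // Starts the process and blocks until it exits, returning its exit code.
    static int runAndWait(ProcessBuilder builder) throws IOException, InterruptedException {
        Process process = builder.start();
        return process.waitFor();
    }
}
```

A fixture setup method would call `prepare` with the configured path and arguments, then `runAndWait` before any test executes.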

Reference Posts

http://andrewmatthewthompson.blogspot.co.uk/2011/12/deploying-packages-to-azure-compute.html

Now, with the emulator and IIS Express running, we are free to execute our tests / specifications from our child test / specification fixtures.

Task 6 – Shutting down the emulator

The emulator and the service can be quite slow to end. It is possible to use the arguments in the following article to remove the package and close the emulator down. However, we have encountered issues with this, so instead we prefer to kill the process. We suggest you find out which of these approaches works best for your situation.

Reference Article http://msdn.microsoft.com/en-us/library/windowsazure/gg433001.aspx

Our code

You must configure Azure to use IIS Express at a project level.

The emulator does not like being shut down manually half way through a test run. If you have to do this, make sure you end the service process also.

This is an advanced technique with a number of moving parts; as such, it is advised that you practise it on a piece of spike code before using it on a production project.
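The "kill the process" approach can be sketched in Java as below. This is not the authors' listing: the class is hypothetical, it relies on the `ProcessHandle` API (Java 9 or later), and the executable names to pass in are left to the reader because the post does not name the emulator processes.

```java
import java.util.List;
import java.util.Optional;
import java.util.stream.Collectors;

// Hypothetical teardown helper: forcibly ends every process with a given executable name.
final class ProcessKiller {

    // True when the command path ends with the given file name (case-insensitive).
    static boolean matches(Optional<String> command, String executableName) {
        return command
                .map(path -> path.replace('\\', '/'))
                .map(path -> path.substring(path.lastIndexOf('/') + 1))
                .map(name -> name.equalsIgnoreCase(executableName))
                .orElse(false);
    }

    // Kills all matching processes and returns how many kill requests succeeded.
    static int killAll(String executableName) {
        List<ProcessHandle> targets = ProcessHandle.allProcesses()
                .filter(handle -> matches(handle.info().command(), executableName))
                .collect(Collectors.toList());
        int killed = 0;
        for (ProcessHandle handle : targets) {
            if (handle.destroyForcibly()) {
                killed++;
            }
        }
        return killed;
    }
}
```

Calling `killAll` from the fixture teardown keeps the shutdown in the same place as the start-up, which helps the tests leave no footprint.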

This technique is limited by the constraints of the SDK and the Emulator

Recap – What have we just done

This has been quite an advanced topic. Defining these steps has been a journey of research and experimentation that we hope to save the reader.

The purpose of this technique is to support the principle that a developer should be able to simply check out source on a standard development configured machine and the tests should just run. They should be atomic, include their own setup and teardown logic, and leave no footprint when they are finished. There is no reason we should break this principle just because we are coding against the cloud.

It is also important that tests are repeatable and have no side effects. Again, techniques such as those demonstrated in this short article help to support this principle.

Happy Test Driving

Beth

Twitter @martindotnet

Elastacloud Community Team

It’s been a while since I’ve even looked at Fluent Management. I have wanted to add some things to it over the last couple of months, but HPC, Big Data, and general consulting and product development have kept me too busy, as has the continual release cycle of the Azure product teams, who are putting out some incredible things and keeping me learning on a daily basis!

Anyway, a couple of things I’ve wanted to add to fluent management since June are OPC packaging deconstruction so that the reliance on msbuild can be phased out and there can be a clean addition of SSL certificates and VM Size updates without the rebuild. Obviously, like many things and many of you, I’m half way through this. It will get finished hopefully in time for the next Azure SDK release which is, I hope, not too far away.

In the interim I decided to write a Linq provider, given that we’re beginning to use more queries against Azure in our own applications and this is a much better mechanism for querying data than the Fluent API. At the moment it only supports queries to storage services but I’ll do a drop which extends this to Cloud Services.

This weekend I’m opening up the source and pushing to Github. It’s been on Bitbucket since its inception but not a lot of people use Mercurial anymore so I thought we’d move with the times. It would be good to get some community involvement with the development of Fluent Management anyway. Some people in our UK community have offered their support, which will be most welcome!

Here is a small snippet of code which will allow you to pull back a named storage account with keys. This week I’ll do another drop to add storage account metadata to the storage structure so that there is everything you need to know returning from a single filtered query.

    var inputs = new LinqToAzureInputs()
    {
        ManagementCertificateThumbprint = TestConstants.LwaugThumbprint,
        SubscriptionId = TestConstants.LwaugSubscriptionId
    };
    var queryableStorage = new LinqToAzureOrderedQueryable<StorageAccount>(inputs);
    var query = from account in queryableStorage
                where account.Name == "<my storage account>"
                select account;
    Assert.AreEqual(1, query.Count());
    var storageAccount = query.FirstOrDefault();
    Debug.WriteLine(storageAccount.Name);
    Debug.WriteLine(storageAccount.Url);
    Debug.WriteLine(storageAccount.PrimaryAccessKey);
    Debug.WriteLine(storageAccount.SecondaryAccessKey);

Happy trails. I’ll publish the repo url in the next couple of days for anyone interested.

Recently I worked on a Service Management problem with my friend and colleague Wenming Ye from Microsoft. We looked at how easy it was to construct a Java Service Management API example. Our criteria were fairly simple.

Simple right? Wrong!

Although I’ve been a Microsoft developer for all of my career I have written a few projects in Java in the past so am fairly familiar with Java Enterprise Edition. I even started a masters in Internet Technology (University of Greenwich) which revolved around Java 1.0 in 1996/97! In fact I’m applying my Java experience to Hadoop now which is quite refreshing!

I used to do a lot of Crypto work and was very familiar with the excellent Java provider model established in the JCE. As such I thought that the certificate management would be fairly trivial. It is, but there are a few gotchas. We’ll go over them now so that we can cover the problem and its resolution before we hit the code.

The Sun Crypto provider has a problem with loading a PKCS#12 structure which contains the private key and associated certificate. In C#, System.Security.Cryptography is specifically built for this extraction, and there is a fairly easy and fluent way of importing the private key and certificate into the Personal store. Java has a keystore file which can act like the certificate store, so in theory the PKCS#12/PFX (not exactly the same, but for the purposes of this article they are) resident in the .publishsettings file can be imported into the keystore.

In practice the Sun provider doesn’t support unpassworded imports, so this will always fail. If you have read my blog posts in the past you will know that I am a big fan of BouncyCastle (BC) and have used it in the past with Java (its original incarnation). Swapping the BC provider in place of the Sun one fixes this problem.

Let’s look at the code. To begin, we import the following:

    import java.io.*;
    import java.net.URL;
    import javax.net.ssl.*;
    import javax.xml.parsers.DocumentBuilder;
    import javax.xml.parsers.DocumentBuilderFactory;
    import javax.xml.parsers.ParserConfigurationException;
    import org.bouncycastle.jce.provider.BouncyCastleProvider;
    import org.bouncycastle.util.encoders.Base64;
    import org.w3c.dom.*;
    import org.xml.sax.SAXException;
    import java.security.*;

The BC imports are necessary for the provider, and the XML packages are used to parse the .publishsettings file.

We need to declare the following variables to hold details of the Service Management call and the keystore file details:

    // holds the name of the store which will be used to build the output
    private String outStore;
    // holds the name of the publishSettingsFile
    private String publishSettingsFile;
    // The value of the subscription id that is being used
    private String subscriptionId;
    // the name of the cloud service to check for
    private String name;

We’ll start by looking at the on-the-fly creation of the Java Keystore. Here we get a Base64 encoded certificate and, after adding the BC provider and getting an instance of a PKCS#12 keystore, we set up an empty store. When this is done we can decode the PKCS#12 structure into a byte input stream, add it to the store (with an empty password) and write the store out, again with an empty password, to a keystore file.

    /* Used to create the PKCS#12 store - important to note that the store is created on the fly so is in fact passwordless -
     * the JSSE fails with masqueraded exceptions so the BC provider is used instead - since the PKCS#12 import structure does
     * not have a password it has to be done this way otherwise BC can be used to load the cert into a keystore in advance and
     * password */
    private KeyStore createKeyStorePKCS12(String base64Certificate) throws Exception {
        Security.addProvider(new BouncyCastleProvider());
        KeyStore store = KeyStore.getInstance("PKCS12", BouncyCastleProvider.PROVIDER_NAME);
        store.load(null, null);
        // read in the value of the base 64 cert without a password (PBE can be applied afterwards if this is needed)
        InputStream sslInputStream = new ByteArrayInputStream(Base64.decode(base64Certificate));
        store.load(sslInputStream, "".toCharArray());
        // we need to create a physical keystore as well here
        try (OutputStream out = new FileOutputStream(getOutStore())) {
            store.store(out, "".toCharArray());
        }
        return store;
    }

Of course, in Java, you have to do more work to set the connection up in the first place. Remember the private key is used to sign messages. Your Windows Azure subscription has a copy of the certificate so can verify each request. This is done at the Transport level and TLS handles the loading of the client certificate so when you set up a connection on the fly you have to attach the keystore to the SSL connection as below.

    /* Used to get an SSL factory from the keystore on the fly - this is then used in the
     * request to the service management which will match the .publishsettings imported
     * certificate */
    private SSLSocketFactory getFactory(String base64Certificate) throws Exception {
        KeyManagerFactory keyManagerFactory = KeyManagerFactory.getInstance("SunX509");
        KeyStore keyStore = createKeyStorePKCS12(base64Certificate);
        // gets the TLS context so that it can use client certs attached to the
        SSLContext context = SSLContext.getInstance("TLS");
        keyManagerFactory.init(keyStore, "".toCharArray());
        context.init(keyManagerFactory.getKeyManagers(), null, null);
        return context.getSocketFactory();
    }

The main method looks like this. It should be fairly familiar to those of you who have been working with the WASM API for a while. We load and parse the XML, add the required headers to the request, send it and parse the response.

    public static void main(String[] args) {
        ServiceManager manager = new ServiceManager();
        try {
            manager.parseArgs(args);
            // Step 1: Read in the .publishsettings file
            File file = new File(manager.getPublishSettingsFile());
            DocumentBuilderFactory dbf = DocumentBuilderFactory.newInstance();
            DocumentBuilder db = dbf.newDocumentBuilder();
            Document doc = db.parse(file);
            doc.getDocumentElement().normalize();
            // Step 2: Get the PublishProfile
            NodeList ndPublishProfile = doc.getElementsByTagName("PublishProfile");
            Element publishProfileElement = (Element) ndPublishProfile.item(0);
            // Step 3: Get the management certificate from the PublishProfile
            String certificate = publishProfileElement.getAttribute("ManagementCertificate");
            System.out.println("Base 64 cert value: " + certificate);
            // Step 4: Load certificate into keystore
            SSLSocketFactory factory = manager.getFactory(certificate);
            // Step 5: Make HTTP request - https://management.core.windows.net/[subscriptionid]/services/hostedservices/operations/isavailable/javacloudservicetest
            URL url = new URL("https://management.core.windows.net/" + manager.getSubscriptionId() + "/services/hostedservices/operations/isavailable/" + manager.getName());
            System.out.println("Service Management request: " + url.toString());
            HttpsURLConnection connection = (HttpsURLConnection) url.openConnection();
            // Step 6: Add certificate to request
            connection.setSSLSocketFactory(factory);
            // Step 7: Generate response
            connection.setRequestMethod("GET");
            connection.setRequestProperty("x-ms-version", "2012-03-01");
            int responseCode = connection.getResponseCode();
            // response code should be a 200 OK - other likely code is a 403 forbidden if the certificate has not been added to the subscription for any reason
            InputStream responseStream = null;
            if (responseCode == 200) {
                responseStream = connection.getInputStream();
            } else {
                responseStream = connection.getErrorStream();
            }
            BufferedReader buffer = new BufferedReader(new InputStreamReader(responseStream));
            // response will come back on a single line
            String inputLine = buffer.readLine();
            buffer.close();
            // get the availability flag
            boolean availability = manager.parseAvailablilityResponse(inputLine);
            System.out.println("The name " + manager.getName() + " is available: " + availability);
        }
        catch (Exception ex) {
            System.out.println(ex.getMessage());
        }
        finally {
            manager.deleteOutStoreFile();
        }
    }
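The listing above reads a single line because the availability response arrives on one line. If a response ever spans several lines, a small helper that drains the whole stream is safer. The following is a hedged sketch only: `ResponseReader` is a hypothetical class that is not part of the original sample, and it assumes the body is UTF-8.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: reads an entire response body into a string.
final class ResponseReader {

    // Reads every line from the stream, closing it afterwards; lines are rejoined with '\n'.
    static String readAll(InputStream stream) {
        StringBuilder body = new StringBuilder();
        try (BufferedReader reader =
                     new BufferedReader(new InputStreamReader(stream, StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                body.append(line).append('\n');
            }
        } catch (IOException ex) {
            // Surface I/O failures without forcing callers to declare a checked exception.
            throw new UncheckedIOException(ex);
        }
        return body.toString();
    }
}
```

The returned string can then be handed to the XML parsing method in place of the single line.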

For completeness, in case anybody wants to try this sample out, here is the rest.

    /* <AvailabilityResponse xmlns="http://schemas.microsoft.com/windowsazure"
     * xmlns:i="http://www.w3.org/2001/XMLSchema-instance">
     * <Result>true</Result>
     * </AvailabilityResponse>
     * Parses the value of the result from the returning XML */
    private boolean parseAvailablilityResponse(String response) throws ParserConfigurationException, SAXException, IOException {
        DocumentBuilderFactory dbf = DocumentBuilderFactory.newInstance();
        DocumentBuilder db = dbf.newDocumentBuilder();
        // read this into an input stream first and then load into xml document
        // (ByteArrayInputStream replaces the deprecated StringBufferInputStream)
        InputStream stream = new ByteArrayInputStream(response.getBytes(java.nio.charset.StandardCharsets.UTF_8));
        Document doc = db.parse(stream);
        doc.getDocumentElement().normalize();
        // pull the value from the Result and get the text content
        NodeList nodeResult = doc.getElementsByTagName("Result");
        Element elementResult = (Element) nodeResult.item(0);
        // use the text value to return a boolean value
        return Boolean.parseBoolean(elementResult.getTextContent());
    }

    // Parses the string arguments into the class to set the details for the request
    private void parseArgs(String args[]) throws Exception {
        String usage = "Usage: ServiceManager -ps [.publishsettings file] -store [out file store] -subscription [subscription id] -name [name]";
        if (args.length != 8)
            throw new Exception("Invalid number of arguments:\n" + usage);
        // stop one short of the end so that args[i+1] is always in range
        for (int i = 0; i < args.length - 1; i++) {
            switch (args[i]) {
                case "-store":
                    setOutStore(args[i+1]);
                    break;
                case "-ps":
                    setPublishSettingsFile(args[i+1]);
                    break;
                case "-subscription":
                    setSubscriptionId(args[i+1]);
                    break;
                case "-name":
                    setName(args[i+1]);
                    break;
            }
        }
        // make sure that all of the details are present before we begin the request
        if (getOutStore() == null || getPublishSettingsFile() == null || getSubscriptionId() == null || getName() == null)
            throw new Exception("Missing values\n" + usage);
    }

    // gets the name of the java keystore
    public String getOutStore() {
        return outStore;
    }

    // sets the name of the java keystore
    public void setOutStore(String outStore) {
        this.outStore = outStore;
    }

    // gets the name of the publishsettings file
    public String getPublishSettingsFile() {
        return publishSettingsFile;
    }

    // sets the name of the publishsettings file
    public void setPublishSettingsFile(String publishSettingsFile) {
        this.publishSettingsFile = publishSettingsFile;
    }

    // gets the value of the subscription id
    public String getSubscriptionId() {
        return subscriptionId;
    }

    // sets the value of the subscription id
    public void setSubscriptionId(String subscriptionId) {
        this.subscriptionId = subscriptionId;
    }

    // gets the name of the cloud service to check for
    public String getName() {
        return name;
    }

    // sets the name of the cloud service to check for
    public void setName(String name) {
        this.name = name;
    }

    // deletes the outstore keystore when it has finished with it
    private void deleteOutStoreFile() {
        // the file will exist if we reach this point
        try {
            java.io.File file = new java.io.File(getOutStore());
            file.delete();
        }
        catch (Exception ex) {}
    }
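As an aside, the flag handling in parseArgs can also be expressed by collecting flag/value pairs into a map, which makes a missing value fail early with a clear message. This is a sketch under assumptions rather than part of the sample Wenming and I wrote: `FlagParser` is a hypothetical class, and it expects arguments strictly in `-flag value` pairs.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical alternative to the switch-based parsing: flag/value pairs into a map.
final class FlagParser {

    // Turns {"-ps", "file", "-name", "svc"} into {ps=file, name=svc}.
    static Map<String, String> parse(String[] args) {
        if (args.length % 2 != 0) {
            throw new IllegalArgumentException("Every flag needs a value");
        }
        Map<String, String> values = new HashMap<>();
        for (int i = 0; i < args.length; i += 2) {
            if (!args[i].startsWith("-")) {
                throw new IllegalArgumentException("Expected a flag but found: " + args[i]);
            }
            // Strip the leading dash so callers look values up by plain name.
            values.put(args[i].substring(1), args[i + 1]);
        }
        return values;
    }
}
```

A caller would then check that the `ps`, `store`, `subscription` and `name` keys are all present before making the request.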

One last thing: head to the BouncyCastle website to download the package and provider. In this implementation Wenming and I called the class ServiceManager.

Looking to the future, when I’ve got some time I may look at porting Fluent Management to Java using this technique. As it stands, I feel this is a better technique than using the certificate store to manage the keys and underlying collection, in that you don’t need elevated privileges to interact with it. That requirement has proved a little difficult to work with when I’ve been using locked-down clients in the workplace or Windows 8.

I’ve been working on both Windows Azure Active Directory recently and the new OPC package format with Fluent Management so expect some more blog posts shortly. Happy trails etc.

The main content of this article depends upon an Azure Service called ‘Access Control Service’. To make use of this service, you’ll need to sign up for an Azure Account. At the time of writing this article there is a 90 day free trial available.

You can find the portal entry page and sign up page at this web address : [ http://www.windowsazure.com/en-us/ ]

The wizard will take you through the sign up process, including signing up for a live account if you do not have one currently.

Azure is Microsoft’s cloud offering. In very simple terms, Microsoft provides huge containers full of hardware across the world, and from these hardware clusters Microsoft offers you the ability to:

In simple terms, Azure gives you the power to focus on writing world class software whilst it handles the hardware and costing concerns. All this power comes with a price tag, but when you take into account the cost of physical data centres, Azure is more than competitive. In this article we will only utilise a tiny fraction of the power of Azure, but we would encourage you to explore it in more depth if you haven’t already.

Web Address – [ http://msdn.microsoft.com/en-us/library/windowsazure/gg429786.aspx ]

The Access Control Service offered by Microsoft via the Azure platform is a broker for Single Sign On solutions. In simple terms, it gives users the ability to authenticate in order to use your application. This authentication uses commercial and trusted Identity Providers such as:

http://www.windowsazure.com/en-us/develop/net/how-to-guides/access-control/

http://msdn.microsoft.com/en-us/magazine/gg490345.aspx

Behaviour Driven Development, or BDD, has been in common use for a number of years, and there are several flavours available in a number of frameworks. The basis of BDD is that we execute living requirements (or in Agile speak, Stories) which have been written in an English readable representation of the required functionality.

Some frameworks take a Domain Specific Language (DSL) approach to defining the requirements that the BDD tests will execute. A common standard is called Gherkin.

DSL : [ http://www.martinfowler.com/bliki/BusinessReadableDSL.html ]

Gherkin : [ http://www.ryanlanciaux.com/2011/08/14/gherkin-style-bdd-testing-in-net/ ]

The framework we will be using in this article is called StoryQ ([ http://storyq.codeplex.com/ ]). We have chosen this framework due to its simple approach to specification design and, more importantly, its support for coded specifications. To be specific, we write the specifications in code and therefore they become part of our living code base.

Although StoryQ provides a nice step by step format and structure to our Stories, it still leaves the problem that the requirements are written from a high level perspective.

As developers, we could implement a White box style approach whereby we do not respect the outer borders of the application. Our tests would then be allowed to interact with the code, and even substitute parts of the code with testing objects such as Mocks. We speak about this approach in detail in this article.

In this case we have elected to respect the boundaries of the application. We will treat the application as if it is within a black box, into which we cannot see or interfere but with whose public interface (in this case a web page) we can interact.

Having made this decision we now need to find a framework to work with StoryQ. We need to allow our tests to drive a browser and cause it to replicate a user’s interaction. There are a number of frameworks available; in this case we select the excellent Selenium + Web Driver packages from Nuget.

[ http://www.nuget.org/packages/Selenium.WebDriver ].

Our own development setup is as follows:

· Windows 8 http://windows.microsoft.com/en-US/windows-8/release-preview

· Visual Studio 2012 http://www.microsoft.com/visualstudio/11/en-us

· Resharper 7 http://www.jetbrains.com/resharper/whatsnew/index.html

· NUnit http://nuget.org/packages/nunit

· NUnit Fluent Extensions http://fluentassertions.codeplex.com/

· Selenium + Web Driver http://www.nuget.org/packages/Selenium.WebDriver

We find that the above combination of software packages makes for an exceptional development environment. Windows 8 is by far the most productive Operating System we have used across any hardware stack. JetBrains ReSharper has become an indispensable tool, without which Visual Studio feels highly limited. NUnit is our preferred testing framework; however, you could use MBUnit or XUnit. For those who must stick with a pure Microsoft ALM experience you could also use MSTest.

In the rest of this article we will demonstrate using Selenium + Web Driver to empower our StoryQ tests. We will show how to represent a User logging into Azure [ACS] prior to them accessing our site.

We will show illustrations of the following

As Test Driven Developers we start with a requirement and a Test –

As A User who is not logged in

When I try to access the site

Then I expect to be taken to a login screen

The Story above reflects the spirit of the original requirement, but attacks it in a very pragmatic way. This will not appeal to purist BDD folks, but it is an approach we have found to be very effective when analysing requirements from the Business. We often find that some of the setup and Administrator stories have been missed from the planning, and that these are required to support the pure business Stories.

We can also see here that the motivation for the ACS integration has changed to the Administrator. This would typically have come out of a conversation with a Business Owner over who this Story benefits and which role would be motivated by the security of the system.

The business would typically readjust their Story palette to include Stories relevant to User profile and data security that arise from discussion with the business owner. This allows us to refine the Stories provided to the developers and better reflect the business’s intentions. The above situation illustrates the cooperative requirement management process within the Agile space. It is a good example of iterative requirement planning as a requirement passes through different stages of planning.

If we execute a dry run of this story –

we see the following output –

Story is We are forced to login to ACS when trying to access the site

In order to keep the web site secure => (#001)

As a Administrator

I want users to have to authenticate via ACS prior to entry to the web site

With scenario Happy Path

We have taken the following steps –

If we dry run the story now we see the following output –

Let’s take a closer look

We can immediately see what we need to do to make this test pass, and in a very human readable output. This is one of the amazing and empowering features of StoryQ: its ability to bridge the gap between technical and business, via its clear and precise output formats.

Note – this is still a bit of a technical story. Terms such as ACS would probably need to be refactored or added to a product definition dictionary; for the sake of this article we have kept this wording in.

Next we start to use the test steps defined in the Story to drive out our functional footprint. In the following sections we will take the first steps to construct the application. The ACS bridge being built through the Azure side of the configuration will not be covered, as this has been covered in depth by the links supplied in earlier sections of this article.

Now let’s get started with the implementation.

This step forces us to use NuGet to bring in the WebDriver and Selenium implementation we referred to earlier. The main reason we are driven to do this now is that we want to be sure we have no ACS cookies registered with the browser. To delete any cookies that are present we need to make a call to the browser. This also gives us the perfect motivation to bring down a browser automation toolkit.

With the WebDriver in place we can now instantiate it and make sure we have cleared down any cookies and are working with a fresh profile. There are drivers available for Firefox, Internet Explorer and Chrome. In our case we have used Firefox.

With the driver in place and instantiated we can now make sure it is clear of cookies.

We are being explicit here despite the fact we have just created a new profile. This is because the step cannot depend on the setup code, the driver or the profile remaining unchanged. We therefore include a deliberate step to delete all cookies and make sure we are working with a clean browser.

In this step we will see a browser window open up and try to browse to the site. To enable this we are driven to add the following elements –

We now add an ASP MVC 4 project.

When we run the story we can see Firefox open and try to navigate to the Home URL, which fails.

We will now add a Unit test to drive out the Home controller and index action.

From this test we drive out the view and the controller; we have added them below for completeness.

The controller and view are skeletal objects; we only implement what we are driven to add by our tests.

We now rerun our test and find that we are green. We are ready to continue our journey.

With the new controller and view in place we will re-run our step and see if we can reach the site. Unfortunately we find we still fail. This is because Visual Studio defaults to the development server. We can work with this, but for the types of sites that we will be running via the Azure Emulator, we prefer to work directly with IIS or IIS Express. Eventually, as mentioned above, we would more than likely be driven by our non-functional requirements to implement the Azure Emulator. However, as we have not been driven there yet, we will configure Visual Studio to run this site via IIS.

The link below explains how to configure which web server your project runs under.

[ http://msdn.microsoft.com/en-us/library/ms178108%28v=vs.100%29.aspx ]

With IIS configured we have proved the potential for this step to succeed. We can see below that without ACS implemented we are successfully able to Test Drive to the Home action of the site.

StoryQ guides our efforts by showing a textual map of what we have done and what we have left to achieve.

To achieve this step we need to add some acceptance criteria and then configure ACS. First let us set our expectations for this step.

In the code above we search for all div elements on the page, followed by a query to make sure the ACS sign-on text can be found in the collection. Note that there are many elements that we could have checked on the page, including a fragment of the URL. As there was a choice, we have selected just enough to get the job done, and will fix the query and criteria if they prove to be problematic.

Note: the selection criteria above will vary depending on the identity providers you have configured. The above criteria work when we have selected multiple identity providers, in this case Google and Windows Live.

The next step is to configure the pathways to ACS. For this to succeed you need to have configured an ACS namespace as per the directions supplied on the links earlier in this article.

The Identity and Access Tool can be used to bypass a lot of manual configuration and pain. It is a substantial improvement on the WIF toolkit. Below are links which provide details of how to configure the tool.

http://visualstudiogallery.msdn.microsoft.com/e21bf653-dfe1-4d81-b3d3-795cb104066e

http://blogs.iqcloud.net/2012/09/federated-authentication-with-azure-acs.html

Note: We have, on occasion, found that we needed to restart Visual Studio 2012 multiple times when installing this tool before the Identity and Access option became available on the context menu.

Note: We have found that, when using this tool with our ASP MVC projects, we then need to add some namespaces as references. You can check what these are by taking a quick peek at the web.config file.

We found we needed to add System.IdentityModel.

If you want to check your configuration, set the ASP MVC project as the start-up project and press F5 to run the project. Depending on the identity providers you configured, you should be presented with a challenge page.

Once you have logged in, you should be presented with the Home page of your ASP MVC application.

With the ACS bridge in place and properly configured, we will now head back to our Story and the step we were completing. We need to rerun the test to check the expectation.

After running the test Firefox starts up and goes to the ACS page. The text inside the div is found and we have another passing step in our story as well as a configured ACS bridge.

Next we will pick an identity provider. We will then log in to the ACS page using the web driver and authenticate.

We will use the web driver to login to Windows Live as the Identity Provider.

We can now execute this step

Success – The test now executes the step to take the driver to the Windows Live login page

To provide this functionality we have written a few extension methods. There is no magic here; the secret of this technique is in its simplicity. We need only do the following:

The final step asserts that we are in fact on the home page, and we are done.

Let us now execute this test and hopefully we should have a green passing story.

Let us just take a moment to take a breath and look back at what we have achieved.

In the following text we will use a number of terms and diagrams that perhaps introduce some complexity. You may not have come across these terms before, so I will take a moment to try to explain them.

There is a subtle variant of object composition called object aggregation. Although the two terms are quite often used interchangeably, they are not strictly the same.

Composition is generally used when an object creates the instances of the objects that make up its composed child model. When the root composing object's lifeline is terminated, or to put this another way when the root object is destroyed, the associated child objects which make up the rest of the object graph are also destroyed.

Aggregation differs from object composition in that an aggregated object generally has an independent lifeline, and quite often it is shared between many objects which aggregate it by reference as part of their object graph. We can therefore say that an aggregated object is generally not explicitly destroyed when a class using that object is destroyed.
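The difference in lifelines can be sketched in a few lines of C#. This is only an illustration under assumed names: `Engine`, `Car`, `Logger` and `OrderService` are hypothetical types that do not appear in the article.

```csharp
using System;

// Hypothetical child object that is owned through composition.
public sealed class Engine : IDisposable
{
    public bool IsDisposed { get; private set; }
    public void Dispose() { IsDisposed = true; }
}

// Hypothetical shared object that is used through aggregation.
public sealed class Logger
{
    public int MessagesWritten { get; private set; }
    public void Write(string message) { MessagesWritten++; }
}

// Composition: Car creates its own Engine and destroys it along with itself.
public sealed class Car : IDisposable
{
    private readonly Engine _engine = new Engine();
    public Engine Engine { get { return _engine; } }
    public void Dispose() { _engine.Dispose(); }
}

// Aggregation: OrderService holds a reference to a Logger it did not create,
// so the Logger has an independent lifeline and can be shared.
public sealed class OrderService
{
    private readonly Logger _logger;
    public OrderService(Logger logger) { _logger = logger; }
    public void PlaceOrder() { _logger.Write("order placed"); }
}
```

Disposing a `Car` disposes its `Engine`, whereas two `OrderService` instances can be handed the same `Logger`, which carries on existing after either service has gone.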

Since the emergence of modern architecture principles, [SOC] and [SRP] have been used to identify vertical separation of concerns. Taking this into consideration, while applying a Service Orientated Architecture [SOA], we can say that each service has a distinct responsibility. For instance, a ‘Security Service’, or an ‘Order Service’.

Our own development setup is as follows:

· Windows 8 http://windows.microsoft.com/en-US/windows-8/release-preview

· Visual Studio 2012 http://www.microsoft.com/visualstudio/11/en-us

· Resharper 7 http://www.jetbrains.com/resharper/whatsnew/index.html

· NUnit http://nuget.org/packages/nunit

· NUnit Fluent Extensions http://fluentassertions.codeplex.com/

· NSubstitute http://nsubstitute.github.com/

We find that the above combination of software packages makes for an exceptional development environment. Windows 8 is by far the most productive operating system we have used across any hardware stack. JetBrains ReSharper has become an indispensable tool, without which Visual Studio feels highly limited. NUnit is our preferred testing framework; however, you could use MbUnit or xUnit. For those who must stick with a pure Microsoft ALM experience you could also use MSTest.

We also use a mocking library; in this case we have used NSubstitute due to its power and simplicity. However, there are other options available with the same functionality, such as Moq and Rhino Mocks. We feel libraries like TypeMock should be avoided due to their lack of respect for the black box model; this lack of respect allows developers to take shortcuts which invalidate good practices.

IOC is the practice of inverting the control of a given object. Typically, in a scenario that does not use IOC, an object is constructed and used by an aggregating object. The diagram below demonstrates the aggregated relationship.

One of the main aspects of the IOC scenario is that we invert the construction of the Object. In real terms this means that the object is constructed externally to the aggregating object. Inverting the creation of an object through constructing it prior to its use by the consuming / aggregating object has a number of advantages, some of which are listed below:

We can see below a classic representation of composition by in-object instantiation –

“That ‘Object A’ creates an instance of ‘Object B’”

What is wrong with the shape above?

The shape above, with Object A instantiating then subsequently aggregating and encapsulating Object B, leads to a scenario where Object B is hidden from the outside world. This leads to a number of issues, which we will examine in a moment. First let us note that there are two other solutions to this issue, which we list below. These fall outside the IOC / DI palette, and this article will not attempt to address or discuss them as they are outside its scope.

It is worth noting that there is a huge debate raging about the value of the Service Locator pattern, and it is one we have no intention of entering into currently.

Both the solutions above have a tendency to lead to bad practices. The danger is that, when these techniques are used incorrectly, they can invalidate two other very important principles.

One of the issues IOC / DI helps to address is needing to decide which object or block of functionality we should invoke dependent on runtime conditions.

This is a huge issue with direct instantiation of aggregated objects. Consider a situation where we have to make a different operation call depending on some condition, and assume for a moment that the condition is only available to us at run time. We can see this expressed in the following code fragment.

The code above highlights the situation where a class has to decide which service to call based on a value that is only available at run time. As we can see, the contract signatures of the two classes are the same, so the aggregating class is effectively having to instantiate two objects where it only needs one, and is making identical calls to one or the other based upon logic which is outside its SOC responsibilities.
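As a minimal sketch of the problem just described, the aggregating class might look something like the following. `ManagerService` is the name used in this article; `LiveDataService`, `ArchiveDataService`, the `Load` method and the `useArchive` flag are hypothetical and chosen purely for illustration.

```csharp
using System;

// Two hypothetical services whose method signatures are identical.
public class LiveDataService
{
    public string Load(int id) { return "live:" + id; }
}

public class ArchiveDataService
{
    public string Load(int id) { return "archive:" + id; }
}

// The aggregating class instantiates both services itself, although any
// single call only ever needs one of them.
public class ManagerService
{
    private readonly LiveDataService _live = new LiveDataService();
    private readonly ArchiveDataService _archive = new ArchiveDataService();

    public string Load(int id, bool useArchive)
    {
        // Selection logic based on a run-time value; this decision is not
        // really the responsibility of ManagerService.
        if (useArchive)
        {
            return _archive.Load(id);
        }
        return _live.Load(id);
    }
}
```

Every new variant of the data service would force another field and another branch into `ManagerService`, which is the coupling that inversion of control sets out to remove.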

The principal difference between the two code examples is that creational control of the DataService ultimately used by the ManagerService (in the context of this article, the aggregating class) has been inverted and moved outside the ManagerService. The effect is that the class maintains clean, simple code which is in line with the SOC principle when applied in this context.

Working with inversion of control we have to consider how we pass an object, which we have inverted the construction of, into the intended aggregating object. In this section we will consider Dependency Injection and how this resolves and satisfies this requirement.

We can see in the code sample in the last section that we injected the object to be used via a parameter on the constructor. This is often referred to as constructor injection. There are in fact two common dependency injection implementations: constructor injection and setter injection.

In the example above, we can see that an object at runtime which corresponds to the IDataService interface needs to be injected into the constructor of the ManagerService. When injected into the constructor at object construction, the service is then stored for later use in an immutable class level field.

This is a classic example of Constructor based Dependency Injection.
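A minimal sketch of constructor injection in this shape is shown below. `ManagerService` and `IDataService` are the names used in this article; the `Load` method, the two implementations and the `CompositionRoot` helper are assumptions made for illustration.

```csharp
using System;

public interface IDataService
{
    string Load(int id);
}

// Hypothetical implementations of the contract.
public class LiveDataService : IDataService
{
    public string Load(int id) { return "live:" + id; }
}

public class ArchiveDataService : IDataService
{
    public string Load(int id) { return "archive:" + id; }
}

public class ManagerService
{
    // Stored once at construction and never reassigned.
    private readonly IDataService _dataService;

    public ManagerService(IDataService dataService)
    {
        if (dataService == null) throw new ArgumentNullException("dataService");
        _dataService = dataService;
    }

    public string Load(int id) { return _dataService.Load(id); }
}

// Hypothetical composition root: the run-time decision about which
// implementation to use now lives outside ManagerService.
public static class CompositionRoot
{
    public static ManagerService Create(bool useArchive)
    {
        IDataService service = useArchive
            ? (IDataService)new ArchiveDataService()
            : new LiveDataService();
        return new ManagerService(service);
    }
}
```

Because `ManagerService` only knows about the interface, a test can pass in a substitute implementation without touching the class itself.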

The above code shows an exposed setter which has been set up as a Dependency Injection target. In this case we have used Microsoft Unity’s Dependency attribute to mark the property as a setter injection target; we will talk about injection containers in more depth shortly. In this scenario we can see that the outside system can inject a dependency via a setter. Depending on the injection container or composition system being used, we can enforce a dependency or mark it as optional.

Setter based injection has been utilized quite often for setting up defaults across an object; the issue with this approach is that it quite often hides bad design choices. We suggest that setter based injection should only be considered if constructor based injection is not appropriate, or if you find yourself in a specialised situation such as utilising MEF [ http://mef.codeplex.com/ ] for View Model / Model injection.
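For comparison, a setter injection target can be sketched without any container at all. The version below is an assumption-laden illustration: the Unity attribute is left out so that the code stays self-contained, and `DefaultDataService`, `ArchiveDataService` and the `Load` method are hypothetical names.

```csharp
using System;

public interface IDataService
{
    string Load(int id);
}

// Hypothetical implementations of the contract.
public class DefaultDataService : IDataService
{
    public string Load(int id) { return "default:" + id; }
}

public class ArchiveDataService : IDataService
{
    public string Load(int id) { return "archive:" + id; }
}

public class ManagerService
{
    // The class falls back to a default it creates itself. This is the
    // "setting up defaults" usage, and it hides the dependency from callers.
    private IDataService _dataService = new DefaultDataService();

    // Injection target: a container, or any other caller, may replace the
    // default after construction. With Unity this property would carry the
    // Dependency attribute mentioned in the text.
    public IDataService DataService
    {
        get { return _dataService; }
        set
        {
            if (value == null) throw new ArgumentNullException("value");
            _dataService = value;
        }
    }

    public string Load(int id) { return _dataService.Load(id); }
}
```

Nothing in the constructor tells a reader that `ManagerService` depends on a data service, which is one reason constructor injection is usually the clearer choice.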

In this short section we have taken a quick tour of dependency injection, and the two methods commonly used to expose dependencies as injection targets. We shall now move on to the real world use of DI and IOC and start to talk about containers and how they empower these principles and provide immense power and flexibility to our codebases.

When designing a modern application using concepts such as Emergent Architecture and some form of test driven development, it becomes natural to utilise design principles such as SOC and SRP. This will often mean that we end up with large object graphs which would be inconvenient to inject into objects manually.

Modern development teams utilize IOC Containers such as those listed below

Microsoft Unity supports both Setter Injection and Constructor Injection. We have discussed some of the issues with Setter Injection and due to these issues, the rest of this article will focus on Constructor Injection using the Unity Container.

Let us now Test Drive a simple example using ASP MVC. We will produce an application which makes use of Unity and some community extensions which provide the binding bridge between ASP MVC and the Unity IOC container.

We will now build a trivial application which utilizes

The application will demonstrate a Specification Driven ASP MVC controller. We will utilize SOC by separating our business functionality from our presentation framework. Our final deliverable application will wire in a Unity container with any needed frameworks to provide a working application.

The purpose of the application will be to Base64 encode a text fragment.

As always with modern development in an emergent setting we start with a test.

Writing the test above generated an ASP MVC project within which the following artefacts are of interest to our discussion.

The controller shown below has a dependency on the IEncoderService; in our case we are passing in a Base64 encoder service. We could use the same controller to encode to anything we please just by changing what we pass into the constructor. Below, for your reference, is the base64 encoding service and model.
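The idea that the controller can encode to anything, depending on what is passed into its constructor, can be sketched as follows. `IEncoderService` and `EncoderServiceBase64` are the names used in this article; `EncoderController` is a hypothetical stand-in that deliberately does not derive from the MVC `Controller` base class, `EncoderServiceHex` is an invented second implementation, and the use of UTF-8 is an assumption.

```csharp
using System;
using System.Text;

public interface IEncoderService
{
    string Encode(string sourceText);
}

public class EncoderServiceBase64 : IEncoderService
{
    public string Encode(string sourceText)
    {
        // UTF-8 is assumed here; the article does not state the encoding.
        return Convert.ToBase64String(Encoding.UTF8.GetBytes(sourceText));
    }
}

// Hypothetical alternative, included only to show that the controller
// does not care which encoder it is given.
public class EncoderServiceHex : IEncoderService
{
    public string Encode(string sourceText)
    {
        return BitConverter.ToString(Encoding.UTF8.GetBytes(sourceText)).Replace("-", "");
    }
}

// Stand-in for the MVC controller. A real controller would derive from
// Controller and return an ActionResult rather than a string.
public class EncoderController
{
    private readonly IEncoderService _encoderService;

    public EncoderController(IEncoderService encoderService)
    {
        if (encoderService == null) throw new ArgumentNullException("encoderService");
        _encoderService = encoderService;
    }

    public string Encode(string text)
    {
        return _encoderService.Encode(text);
    }
}
```

Swapping `new EncoderServiceBase64()` for `new EncoderServiceHex()` at construction changes the output format without any change to the controller code.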

Once the test has been completed and is passing thusly.

Then it is simply a matter of running the website to see what we need to do next.

We receive an exception page when we ask the ASP MVC framework to load the view. This is because the framework is trying to create the controller. However, because we are making use of Dependency Injection and injecting the EncoderService into the controller, the framework can only find a parameterised constructor and is therefore unable to create an instance of the controller. Thus we need to give the framework a little help.

Step 1: We now need to bring in an ASP MVC bridge to Unity. We could hand-code this, but NuGet already has a useful package [Unity.Mvc3] in its repository which supplies the functionality we need.

Step 2: With the Unity MVC 3 package in place, we now need to tell the application how to use it. Fortunately it has added a Readme file to our solution, within which we find the following text.

“To get started, just add a call to Bootstrapper.Initialise() in the Application_Start method of Global.asax.cs and the MVC framework will then use the Unity.Mvc3 DependencyResolver to resolve your components.”

As directed above we will now insert a call to the Bootstrapper into the Global.asax file.

Step 3: With the Bootstrapper registered, construction is now delegated to the UnityDependencyResolver. This uses the Unity container, in which we have not yet registered the IEncoderService interface against a concrete implementation, so we now see a new error when we try to run the site.

Step 4: Follow the guidance in the error message and add an implementation for the interface (IEncoderService), which is expressed as a dependency on the controller we are trying to construct. Let’s do that right now.

We have now registered the IEncoderService with the container and told it that the EncoderServiceBase64 is to be injected into any object requiring an implementation of the IEncoderService. In addition we have told the container to use a ContainerControlledLifetimeManager, which means that we will share the same instance of the EncoderServiceBase64 service across all instances that require it. This is in effect a Singleton pattern implementation [ http://en.wikipedia.org/wiki/Singleton_pattern ].
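To make the shared-instance behaviour concrete, here is a deliberately tiny stand-in for a container. It is not Unity and does not use Unity's API; `TinyContainer` and its methods are hypothetical, and the sketch only shows what it means for a registration to hand back the same instance on every resolve.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public interface IEncoderService
{
    string Encode(string sourceText);
}

public class EncoderServiceBase64 : IEncoderService
{
    public string Encode(string sourceText)
    {
        // UTF-8 is assumed here; the article does not state the encoding.
        return Convert.ToBase64String(Encoding.UTF8.GetBytes(sourceText));
    }
}

// Hypothetical miniature container: each registration owns exactly one
// lazily created instance, which is returned on every Resolve call.
public class TinyContainer
{
    private readonly Dictionary<Type, Lazy<object>> _singletons =
        new Dictionary<Type, Lazy<object>>();

    public void RegisterSingleton<TInterface, TImplementation>()
        where TImplementation : TInterface, new()
    {
        _singletons[typeof(TInterface)] = new Lazy<object>(() => new TImplementation());
    }

    public TInterface Resolve<TInterface>()
    {
        // Throws KeyNotFoundException when nothing has been registered.
        return (TInterface)_singletons[typeof(TInterface)].Value;
    }
}
```

After `RegisterSingleton<IEncoderService, EncoderServiceBase64>()`, two calls to `Resolve<IEncoderService>()` return the same object, which is the behaviour the container-controlled lifetime described above provides.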

Finally let us now rerun the application and see if we are exception free.

The View source is below

Let us now enter some text to Base64 Encode

If we submit this query we can see that we hit the breakpoint I have set in the injected Encoder Service. This proves that Unity has composed the Controller for us as expected.

Let us continue with the application execution and hopefully see the end result of the encoded operation.

The View source is below

This article has covered a lot of ground and introduced a lot of new concepts. Ultimately the point of development is producing good quality software that can be expanded and maintained with relative ease; while IOC and DI are not the complete answer, they do form part of the solution. IOC and DI should be in every professional developer’s toolbox, and we hope this article has demonstrated both the theory and the real world power of Inversion of Control and Dependency Injection.

Author

Beth

It’s been a long time coming but I’ve finally added a Fluent Management Wiki online. Thankfully Azure Websites makes it very easy to add WordPress instances 🙂

Anyway, the changelist is immense from version 0.4 to 0.4.1.6, but the most notable change is IaaS support. There are also some bug fixes and quite a few breaking interface changes.

My hope is that the Wiki will generate enough feedback for us to understand how people are using it, what they want to see and what their issues are so please feel free to be brutal. It’s only going to turn into a great library if you are!

The Wiki can be viewed @ http://fluentmanagement.elastacloud.com

White Box BDD is built around the concept of writing meaningful unit tests. With White Box Behaviour Driven Development, sometimes referred to as SDD or Specification Driven Development, we are trying to provide a behaviour statement around an object or a tree of objects which supply a given set of functionality.

White Box BDD attempts to provide a way to describe the behaviour within a system, unlike black box BDD, which classically attempts to describe the behaviour of a whole vertical slice of a system.

My own development setup is as follows:

· Windows 8 http://windows.microsoft.com/en-US/windows-8/release-preview

· Visual Studio 2012 http://www.microsoft.com/visualstudio/11/en-us

· Resharper 7 http://www.jetbrains.com/resharper/whatsnew/index.html

· NUnit http://nuget.org/packages/nunit

· NUnit Fluent Extensions http://fluentassertions.codeplex.com/

I find the above combination of software packages to be absolutely stunning as a development environment, and Windows 8 is absolutely the most productive operating system I have ever used across any hardware stack. As always, without JetBrains ReSharper Visual Studio feels almost crippled. NUnit is my go-to testing framework, but of course you have MbUnit and xUnit, and for those who must stick with a pure Microsoft ALM style experience you have MSTest. Overlaying a fluent extensions library allows me to write more fluent and semantic assertions, which falls in line with the concepts of White Box BDD. For most of the frameworks above there are similar sets of extensions available; MSTest is, to my knowledge, the exception to the rule.

The http://fluentassertions.codeplex.com/ framework is particularly powerful, as it allows you to chain assertions, as shown by some of the examples below, taken from their site.

var recipe = new RecipeBuilder()

.With(new IngredientBuilder().For("Milk").WithQuantity(200, Unit.Milliliters))

.Build();

Action action = () => recipe.AddIngredient("Milk", 100, Unit.Spoon);

action

.ShouldThrow<RuleViolationException>()

.WithMessage("change the unit of an existing ingredient", ComparisonMode.Substring)

.And.Violations.Should().Contain(BusinessRule.CannotChangeIngredientQuantity);

And

string actual = "ABCDEFGHI";

actual.Should().StartWith("AB").And.EndWith("HI").And.Contain("EF").And.HaveLength(9);

There are other choices, and the techniques contained in this article can be used with earlier versions of the Microsoft development environments. Indeed, in most modern languages you can find tooling support, including but not limited to:

· Java – IntelliJ, Eclipse + JUnit

· PHP

· Ruby on Rails – RubyMine

In fact the techniques can be universally applied to any modern development environment, and I have yet to come across a project that cannot be tested where it has been correctly conceptualized and developed in line with SOLID http://en.wikipedia.org/wiki/Solid_(object-oriented_design) principles.

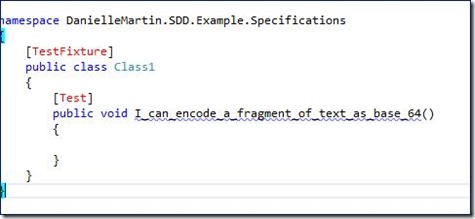

The first step is to create a specification library. Because we are going to use our specifications to drive our package and project choices, we do not start with a production code project such as an ASP MVC project; at this stage we only need to add our specification library.

This introduces an important principle which drives White Box BDD, called Emergent Design. This principle says that the natural act of coding will force the correct design for the application to emerge. Design up front, with the exception of big block conceptualization, is therefore invalid, and we should instead allow our architecture to emerge naturally.

So with this principle in mind let us open the new project dialog and add a class library.

Looking at the image above you can see that we have used the .Specifications extension to the project name, which will naturally be reflected in the project namespace. This is my personal preference, and for me it implies the intent of the project.

For the scope of this demonstration I am going to keep the requirements very simple. I will outline them below using Gherkin-esque syntax.

Given that I have a set of text

When I pass the text into the Encoder

Then I expect to be given back a correctly encoded base 64 fragment of text

Next we write a test definition. You can see that the TestFixture and Test have not been resolved.

This is because we will not add frameworks until we need them, so we will add them next.

Next we add the packages via Nuget

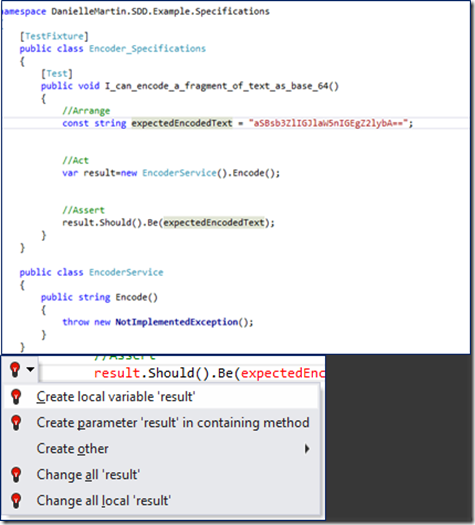

The next step is to add the acceptance criteria. It is crucial that this is the first step we start with.

What you will notice is that ReSharper has marked the things it doesn’t know about in RED. We now follow the RED: clicking on the RED and using ReSharper’s Alt+Enter command helps us to do this.

When we have finished following the RED we find we have driven out a basic shell for the encoder.

While I was getting the expectedEncodedText from an online base 64 encoder site I realised that Encode() needed a parameter for the source text, so let’s add this. It’s worth noting we didn’t forget about this; rather, we waited until we were driven to implement it. This is fundamental to this style of coding.

With a little typing to add the parameter sourceText in the test, which of course went RED, and then a few Alt+Enters, we see that the parameter is pushed down into our EncoderService class.

Next we run the test and we see that we are RED and we hit the NotImplementedException.

In the next step we need to make the test pass, or go green. This will give us a guarantee of the behaviour or specification as expressed by the assertion.

First of all I add the base64 encoding code
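A minimal sketch of what the encoder and its specification could look like at this point is shown below. `EncoderService`, `Encode`, `sourceText` and `expectedEncodedText` are the names used in this article; the sample text, the choice of UTF-8 and the plain-method form of the specification (instead of an NUnit test) are assumptions made to keep the example self-contained.

```csharp
using System;
using System.Text;

public class EncoderService
{
    public string Encode(string sourceText)
    {
        // UTF-8 is an assumption; the article does not state the encoding.
        byte[] bytes = Encoding.UTF8.GetBytes(sourceText);
        return Convert.ToBase64String(bytes);
    }
}

public static class EncoderSpecification
{
    // Plain-method version of the specification. With NUnit this would be
    // a [Test] method inside a [TestFixture] and would use an assertion.
    public static bool GivenText_WhenEncoded_ThenBase64IsReturned()
    {
        const string sourceText = "Hello World";
        const string expectedEncodedText = "SGVsbG8gV29ybGQ=";

        string actual = new EncoderService().Encode(sourceText);

        return actual == expectedEncodedText;
    }
}
```

The method name carries the Given / When / Then wording of the requirement, so a passing result reads as a statement about behaviour rather than about implementation.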

Let’s run the test again.

We now find that we are Green.

This is a rather trivial example, but what have we done here? In easy to understand points:

· One, we described a behaviour, or rather a specification for a behaviour, using the test name and the Assert.

· Two, we drove out the code directly from the test using ReSharper magic.

· Three, our end point was a passing specification.

I hope this example has been useful, if basic.

Thanks

Beth

I remember being on a project some 9 years ago and having to build one of these. To build a realtime gateway is not as easy as you would think. In my project there were accountants uploading invoices of various types and formats that we had to translate into text using an OCR software package. We built a workflow using a TIBCO workflow designer solution (which I wouldn’t hesitate now to replace with WF!)

At a certain point people from outside the organisation had the ability to upload a file, and this file had to be intercepted by a gateway before being persisted and operated on through the workflow. You would think that this was an easy and common solution to implement. However, at the time it wasn’t. We used a Symantec gateway product and its C API, which allowed us to use the ICAP protocol and thus do real time scanning.

For the last 6 months I’ve wanted to talk about Microsoft Endpoint Protection (http://www.microsoft.com/en-us/download/details.aspx?id=29209), which is still in CTP as I write this. It’s a lesser known plugin which exists for Windows Azure. For anybody that receives uploaded content, this should be a commonplace part of the design. In this piece I want to look at a pattern for rolling your own gateway with Endpoint Protection. It’s not ideal, because it literally is a virus scanner: it enables real time protection and certain other aspects, but uses Diagnostics to show issues that have taken place.

So initially we’ll enable the imports:

<Imports>
  <Import moduleName="Diagnostics" />
  <Import moduleName="Antimalware" />
  <Import moduleName="RemoteAccess" />
  <Import moduleName="RemoteForwarder" />
</Imports>

You can see the addition of Antimalware here.

Correspondingly, our service configuration gives us the following new settings:

<Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="<my connection string>" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.ServiceLocation" value="North Europe" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.EnableAntimalware" value="true" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.EnableRealtimeProtection" value="true" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.EnableWeeklyScheduledScans" value="false" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.DayForWeeklyScheduledScans" value="7" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.TimeForWeeklyScheduledScans" value="120" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.ExcludedExtensions" value="txt|rtf|jpg" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.ExcludedPaths" value="" />
<Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.ExcludedProcesses" value="" />

The settings configure Endpoint Protection for real time protection and scheduled scans (weekly scheduled scans are switched off in this example). It’s obviously highly configurable, like most virus scanners, and in the background will update all malware definitions securely from a Microsoft source.

The first thing we’ll do is download a free virus test file from http://www.eicar.org/85-0-Download.html. EICAR has ensured that this test file is picked up by most common virus scanners, so Endpoint Protection should recognise it immediately. I’ve tested this with the .zip file but any of them are fine.

The first port of call is setting up diagnostics to collect and transfer the event log entries. We can do this within our RoleEntryPoint.OnStart method for our web role.

var config = DiagnosticMonitor.GetDefaultInitialConfiguration();

//capture Microsoft Antimalware events at levels 1 to 4 (critical, error, warning and informational); verbose entries are excluded

config.WindowsEventLog.DataSources.Add("System!*[System[Provider[@Name='Microsoft Antimalware'] and (Level=1 or Level=2 or Level=3 or Level=4)]]");

//write to persisted storage every 1 minute

config.WindowsEventLog.ScheduledTransferPeriod = System.TimeSpan.FromMinutes(1.0);

DiagnosticMonitor.Start("Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString", config);

Okay, so in testing it looks like, after cutting and pasting the file onto the desktop or another location, it takes about 10 seconds for Endpoint Protection to pick this up and quarantine the file. Given this we’ll set the bar at 20 seconds.

I created a very simple ASP.NET web forms application with a file upload control. There are two ways to detect whether the file has been flagged as malware:

1. Check whether the file has been removed from the location it was written to.

2. Check the event log for a Microsoft Antimalware entry relating to the file.

We’re going to focus on No.2, so I’ve created a simple button click event which will persist the file. Endpoint Protection will kick in within a short period, so we’ll write the file to disk and then pause for 20 seconds. After our wait we’ll then check the event log, and in the message string we’ll have a wealth of information about the file which has been quarantined.

bool hasFile = fuEndpointProtection.HasFile;

string path = "";

if(hasFile)

{

path = Path.Combine(Server.MapPath("."), fuEndpointProtection.FileName);

fuEndpointProtection.SaveAs(path);

}

// block here until we check endpoint protection to see whether the file has been delivered okay!

Thread.Sleep(20000);

var log = new EventLog("System", Environment.MachineName, "Microsoft Antimalware");

foreach(EventLogEntry entry in log.Entries)

{

if(entry.InstanceId == 1116 && entry.TimeWritten > DateTime.Now.Subtract(new TimeSpan(0, 2, 0)))

{

if(entry.Message.IndexOf(fuEndpointProtection.FileName, StringComparison.OrdinalIgnoreCase) >= 0)

{

Label1.Text = "File has been found to be malware and quarantined!";

return;

}

}

}

Label1.Text = path;

The event log entry should look like this. It contains details on the affected process, the fact that it is a virus, and some indication of where to get more information by providing a threat URL.

%%860 4.0.1521.0 {872DA7D0-383A-4A18-A447-DC4C7E71785F} 2012-08-12T09:31:18.362Z 2147519003 Virus:DOS/EICAR_Test_File 5 Severe 42 Virus http://go.microsoft.com/fwlink/?linkid=37020&name=Virus:DOS/EICAR_Test_File&threatid=2147519003 3 2 3 %%818 D:\Windows\System32\inetsrv\w3wp.exe NT AUTHORITY\NETWORK SERVICE containerfile:_F:\sitesroot\0\eicar_com.zip;file:_F:\sitesroot\0\eicar_com.zip->(Zip);file:_F:\sitesroot\0\eicar_com.zip->eicar.com 1 %%845 1 %%813 0 %%822 0 2 %%809 0x00000000 The operation completed successfully. 0 0 No additional actions required NT AUTHORITY\SYSTEM AV: 1.131.1864.0, AS: 1.131.1864.0, NIS: 0.0.0.0 AM: 1.1.8601.0, NIS: 0.0.0.0

Okay, so this is a very tame example but it does prove the concept. In the real world you may even want to have a proper gateway which acts as a proxy and then forwards the file on to a "checked" store if it succeeds. We looked at the two ways you can check whether the file has been treated as malware. The first, checking whether the file has been deleted from its location, is too non-deterministic: although "real time" means real time, we don't want to block, wait and time out on this. The second is better because we will get a report if the file is detected. This being the case, a more hardened version of this example would entail building a class which treats the file write as a task and asynchronously pings back the user if the file has been treated as malware. Something like this could be written as an HttpModule or ISAPI filter to pursue the test and either continue with the request, or end the request and return an HTTP error code to the user with a description of the problems with the file.
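As a rough sketch of the asynchronous direction suggested above, the fixed 20 second sleep could be replaced with a polling task that finishes as soon as a detection is seen. Everything here is an assumption made for illustration: `DetectionPoller` and `WaitForDetectionAsync` are hypothetical names, and the `detected` delegate stands in for whatever check is used, such as the event log scan from the button click handler.

```csharp
using System;
using System.Threading.Tasks;

public static class DetectionPoller
{
    // Calls 'detected' repeatedly until it returns true or 'timeout' has
    // elapsed, waiting 'interval' between attempts without blocking a thread.
    public static async Task<bool> WaitForDetectionAsync(
        Func<bool> detected, TimeSpan timeout, TimeSpan interval)
    {
        DateTime deadline = DateTime.UtcNow + timeout;

        while (DateTime.UtcNow < deadline)
        {
            if (detected())
            {
                return true;
            }
            await Task.Delay(interval);
        }

        // One last check once the deadline has passed.
        return detected();
    }
}
```

A caller would await `WaitForDetectionAsync` with a 20 second timeout and, say, a one second interval, returning early when the file is flagged instead of always waiting the full period.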

Happy trails etc.